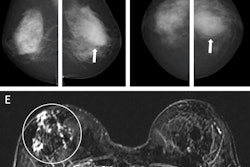

AI software assessed for its accuracy in reading mammograms performed less well than human readers by recording a higher recall rate and significantly more missed cancers, a team from a U.K. breast screening facility has found.

Dr. Shama Puri, Dr. Mark Bagnall, and Dr. Gabriella Erdelyi, consultant radiologists from the Breast Screening Unit at the University Hospital of Derby and Burton NHS Foundation Trust, have published their findings from a service evaluation project in an article posted on 16 March by Clinical Radiology.

The position of the UK National Screening Committee (UKNSC) is that the evidence for the accuracy of AI and its effects on outcomes in the whole screening pathway is not strong enough to justify its use in the National Health Service (NHS) Breast Screening Programme. Thus, a service evaluation project was undertaken to assess the clinical benefit of AI when used as a third reader and as a silent reader in a double-reading breast screening program and to evaluate the impact and feasibility of introducing it to the national program.

The Derby and Burton unit has a screening population of over 135,000 and was one of 14 breast screening sites in the U.K. to take part in the project, the authors noted.

Before the evaluation began, the AI software’s performance was checked and confirmed in a retrospective validation exercise using the unit’s data, with the AI provider proactively monitoring the tool throughout the study. For the evaluation itself, recent historical data of over 23,000 cases, including confirmed cancer cases, was extracted, anonymized, and used to calibrate and test thresholds for the AI product. The steering group and core project team held fortnightly meetings.

The AI product acted as a silent “third reader” in the background during the evaluation, the researchers explained, and it had no impact on the flow of screening or care. They noted cases in which the AI agreed with the radiologists as "concordant cases." Cases in which the AI product indicated recall but humans read it as routine were called "positive discordant cases"; these were flagged to be reviewed by a group of human readers. "Negative discordant cases" were those recalled by human readers but not by AI, and they were also flagged.

Key findings

A total of 9,547 cases were included in the evaluation during the period from December 2023 to March 2024. The AI and the human readers agreed in 86.7% of cases. The recall rate for AI was 13.6%; the human double reader recall rate (which included consensus discussion) was 3.2%. This included consensus discussion; the authors add that their unit's average recall rate before consensus ranges between 5% and 5.5%.

Positive discordant and negative discordant rates were 11.9% and 1.5%, respectively. Of the 1,135 positive discordant cases (flagged by AI but not by the human readers) reviewed, one patient was recalled for an area of perceived parenchymal distortion. The patient was subsequently assessed with CT, ultrasound, and clinical evaluation, all of which were normal. The authors noted that she remained healthy at the time of writing their article.

Of the 139 negative discordant cases (i.e., those flagged by human readers but not by AI) reviewed, the AI missed eight cancers recalled by human readers. Of those eight, seven had been flagged by both readers and one by one reader. With a total of 91 cancers detected over the evaluation period, this constituted a missed cancer rate of 8.79%.

Furthermore, when these results were analyzed, there was no consistency in the types of cancers missed, nor did the type of breast tissue -- dense, fatty, or mixed -- show a pattern.

“Performance of AI was inferior to human readers in our unit,” the authors wrote. “Having missed a significant number of cancers makes it unreliable and not safe to be used in clinical practice.”

Furthermore, they noted that the use of the AI tool at present would create more, rather than less, work for radiologists. With its high (and unreliable) rate of abnormal interpretation, the software would require human readers to monitor carefully and could lead to a significant increase in the number of consensus discussions required by human readers. This in turn might lead to unnecessary recalls.

“Our impression is that the software requires a lot of fine-tuning before considering its introduction in routine practice.”

Read the full study here.