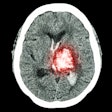

Deep-learning software can outperform a nondeep-learning method that's been used previously in computer-aided detection (CAD) software and may be able to help radiologists differentiate between prostate cancer and benign conditions on MRI, according to research presented this month at ECR 2017 in Vienna.

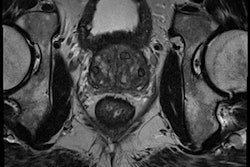

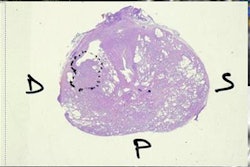

Investigators from Huazhong University of Science and Technology in Wuhan, China, and Yale University School of Medicine in the U.S. developed deep-learning software and compared its performance with that of a nondeep-learning method for differentiating prostate cancer from benign conditions such as benign prostatic hyperplasia (BPH) and prostatitis. In a study of more than 170 patients with more than 2,600 prostate MR images, the group found that the deep-learning software yielded significantly higher sensitivity and specificity than the nondeep-learning method.

"Deep learning can be used to assist radiologists to search for prostate cancer patients," said senior author and presenter Dr. Liang Wang.

Varied detection performance

Although detecting prostate cancer is critical for treatment selection and personalized patient management, MRI's performance for prostate cancer detection varies considerably, he noted. A recent meta-analysis found that sensitivity for detecting prostate cancer on multiparametric MRI ranged from 66% to 81%, while specificity ranged from 82% to 92%. A separate literature review found that sensitivity ranged from 76% to 96% and specificity from 23% to 87%.

To see whether deep learning might help, the researchers compared the performance of deep convolutional neural networks with that of a nondeep-learning method called bag-of-words for fully automated classification of prostate cancer on MRI. The bag-of-words method represented the previous state of the art for image recognition and analysis. Because the source code and implementation details of prior commercial CAD algorithms were not available, the team chose bag-of-words to provide a fair comparison with the deep-learning software.
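A bag-of-(visual-)words classifier commonly works in three steps: extract local descriptors from each image, cluster all descriptors into a "visual vocabulary" (often with k-means), and represent each image as a histogram of how often each visual word occurs; that histogram then feeds a conventional classifier. The sketch below illustrates only the representation step under simplifying assumptions: raw 2x2 pixel patches stand in for the richer descriptors (such as SIFT) that real systems typically use, and the tiny images, vocabulary size, and function names are hypothetical. The article does not describe the team's descriptors, vocabulary size, or classifier.

```python
def dist2(a, b):
    """Squared Euclidean distance between two descriptor vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def patches(image, size=2):
    """Hypothetical local descriptors: flattened, non-overlapping size x size patches."""
    rows, cols = len(image), len(image[0])
    return [[image[r + i][c + j] for i in range(size) for j in range(size)]
            for r in range(0, rows - size + 1, size)
            for c in range(0, cols - size + 1, size)]

def nearest(vec, centers):
    """Index of the visual word (cluster center) closest to a descriptor."""
    return min(range(len(centers)), key=lambda i: dist2(vec, centers[i]))

def kmeans(points, k, iters=10):
    """Lloyd's k-means with a deterministic farthest-first initialization."""
    centers = [list(points[0])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(dist2(p, c) for c in centers))
        centers.append(list(far))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centers)].append(p)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                centers[i] = [sum(dim) / len(members) for dim in zip(*members)]
    return centers

def build_vocabulary(images, k):
    """Cluster descriptors pooled from all training images into k visual words."""
    return kmeans([d for img in images for d in patches(img)], k)

def bow_histogram(image, vocabulary):
    """Bag-of-words encoding: normalized visual-word frequencies for one image."""
    counts = [0] * len(vocabulary)
    for d in patches(image):
        counts[nearest(d, vocabulary)] += 1
    total = sum(counts)
    return [c / total for c in counts]

# Hypothetical 4x4 grayscale "images" (not MRI data).
dark = [[10] * 4 for _ in range(4)]
bright = [[200] * 4 for _ in range(4)]
mixed = [[200] * 4 for _ in range(2)] + [[10] * 4 for _ in range(2)]

vocab = build_vocabulary([dark, bright, mixed], k=2)
for name, img in [("dark", dark), ("bright", bright), ("mixed", mixed)]:
    print(name, bow_histogram(img, vocab))
```

Note that the histogram discards where each visual word appears in the image; a convolutional network, by contrast, learns its own features directly from the pixels.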

Of the 172 patients included in the study, 79 had prostate cancer and 93 had benign conditions. The 93 benign cases included 75 with BPH and 18 with both BPH and prostatitis. The 172 patients had a total of 2,640 MR images.

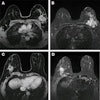

The prostate MRI exams were performed on a Magnetom Skyra 3-tesla whole-body MRI scanner (Siemens Healthineers) using syngo MR D13 software, an 18-element body matrix coil, and a 32-element spine matrix coil. The acquisition protocol included T1- and T2-weighted images, along with diffusion-weighted images and dynamic contrast enhancement.

The researchers used a workstation with two Titan X graphics processing units (Nvidia), an eight-core Intel Core i7 central processing unit, and 32 GB of memory. The deep-learning software was built on the Caffe deep-learning framework developed by the Berkeley Vision and Learning Center in the U.S., and Python code was used for data preparation, analysis, and visualization. Using the software, a patient's images could be tested in less than half a second, Wang said.
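To show what "testing" an image with a convolutional neural network involves, here is a minimal forward pass (convolution, ReLU, max pooling, a dense layer, and softmax) timed with `time.perf_counter`. This is a hypothetical, pure-Python sketch: it does not use Caffe, does not reproduce the team's network, and its weights are hand-set rather than learned. The two outputs merely stand in for "benign" and "cancer" scores.

```python
import math
import time

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (what CNN convolution layers compute); stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def relu(fmap):
    """Elementwise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping size x size max pooling."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]

def softmax(logits):
    """Numerically stable softmax over class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(image, kernel, dense_weights, dense_bias):
    """conv -> ReLU -> max-pool -> flatten -> dense -> softmax."""
    pooled = max_pool(relu(conv2d(image, kernel)))
    features = [v for row in pooled for v in row]
    logits = [sum(w * x for w, x in zip(ws, features)) + b
              for ws, b in zip(dense_weights, dense_bias)]
    return softmax(logits)

# Hypothetical 6x6 grayscale input and hand-set (not learned) weights.
image = [[((r * 6 + c) % 7) / 6.0 for c in range(6)] for r in range(6)]
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]  # vertical-edge detector
dense_weights = [[0.5, -0.5, 0.5, -0.5], [-0.5, 0.5, -0.5, 0.5]]  # 2 classes x 4 features
dense_bias = [0.0, 0.0]

start = time.perf_counter()
probs = forward(image, kernel, dense_weights, dense_bias)
elapsed = time.perf_counter() - start
print(f"class probabilities: {probs}, inference time: {elapsed * 1e3:.3f} ms")
```

A real network stacks many such layers with learned weights and runs them on GPUs, which is why frameworks such as Caffe are used instead of hand-written loops; the timing pattern, however, is the same.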

The team used analysis of variance (ANOVA) to assess the difference in performance between the two methods.
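A one-way ANOVA compares the spread between group means with the spread within groups; their ratio is the F statistic. The article does not say which scores fed the team's ANOVA, so the sketch below uses hypothetical accuracy scores from three repeated runs per method (not study data). With only two groups, the one-way ANOVA F equals the square of the pooled-variance two-sample t statistic.

```python
from statistics import mean

def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA across groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical accuracy scores (%) from three repeated runs per method; not study data.
nondeep_runs = [60, 62, 61]
deep_runs = [76, 78, 77]
F_CRIT_1_4 = 7.71  # tabulated critical value of F(1, 4) at alpha = 0.05

f_stat, df_b, df_w = one_way_anova(nondeep_runs, deep_runs)
print(f"F({df_b}, {df_w}) = {f_stat:.1f}; significant at 0.05: {f_stat > F_CRIT_1_4}")
# prints: F(1, 4) = 384.0; significant at 0.05: True
```

The critical value applies only to 1 and 4 degrees of freedom; in practice a statistics library would report an exact p-value instead.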

A significant difference

The researchers found the deep-learning method offered a statistically significant improvement in performance over the nondeep-learning approach in differentiating prostate cancer from nonprostate cancer.

Performance of deep-learning and nondeep-learning methods in differentiating prostate cancer

| Metric | Nondeep-learning method | Deep-learning software |
|---|---|---|
| Mean accuracy | 61% | 77.3% |
| Sensitivity | 53.2% | 69.2% |
| Specificity | 68.8% | 83.9% |
| Positive predictive value | 59.2% | 78.6% |
| Negative predictive value | 63.4% | 76.5% |

The difference between the two methods was statistically significant (p ≤ 0.05).
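The five metrics in the table all derive from a confusion matrix of true/false positives and negatives; positive and negative predictive values additionally depend on how common cancer is in the tested population. As a rough consistency check, the sketch below plugs each method's reported sensitivity and specificity into Bayes' rule, assuming the cohort's patient-level prevalence of 79/172. The article does not say whether the table was computed per patient or per image, so this is only an approximation; the implied accuracy, PPV, and NPV land within about one percentage point of the reported values. The function names are illustrative.

```python
def metrics_from_counts(tp, fp, tn, fn):
    """Standard diagnostic metrics from confusion-matrix counts (or rates)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
    }

def expected_from_rates(sensitivity, specificity, prevalence):
    """Metrics implied by sensitivity, specificity, and prevalence (Bayes' rule)."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    tn = specificity * (1 - prevalence)
    fp = (1 - specificity) * (1 - prevalence)
    return metrics_from_counts(tp, fp, tn, fn)

prevalence = 79 / 172  # assumption: patient-level cancer prevalence in the study cohort
for method, sens, spec in [("nondeep learning", 0.532, 0.688),
                           ("deep learning", 0.692, 0.839)]:
    implied = expected_from_rates(sens, spec, prevalence)
    print(method, {k: round(v, 3) for k, v in implied.items()})
```

Because PPV and NPV shift with prevalence, the same software would show different predictive values in a population where prostate cancer is more or less common than in this cohort.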

Wang acknowledged the study's limitations, including its use of only the morphological information in the images.

"The clinical use of added quantitative features from functional images remains to be investigated," he said.

However, he added, prostate MR images with typical morphological information are useful for developing machine-learning methods.