Collaborative AI-radiologist workflows have great potential to improve accuracy in cancer diagnoses, reducing both unnecessary biopsies and missed diagnoses. However, the integration of AI into diagnostic workflows requires careful assessment to ensure its safety, accuracy, and appropriate use, according to a new report posted on 7 March by European Radiology.

AI use in radiology workflows has increased rapidly, especially for analysis and decision support in diagnosis, note authors Prof. Anwar Padhani of the Paul Strickland Scanner Centre and Mount Vernon Cancer Centre, Northwood, U.K., and Nickolas Papanikolaou, PhD, head of the Champalimaud Foundation's Computational Clinical Imaging Group in Lisbon.

AI can free radiologists from tasks that can be automated. It can also act as a second reader, detect anomalies that radiologists might miss, triage patients based on risk stratification, reduce time to reporting, and make workflows more efficient, all features that are especially valuable in understaffed settings.

However, these benefits come with challenges, and the authors noted that not all AI tools are suitable for implementation into clinical workflows. AI tools can introduce automation bias, there is a risk of missed and delayed diagnoses or overdiagnosis, and lack of oversight can lead to ethical and safety issues.

The use of AI in collaborative workflows requires thorough research and the development of strategies to mitigate these issues, wrote Padhani and Papanikolaou.

They examined different types of AI-inclusive reading workflows used for prostate MRI (single-reader, early diagnostic setting) in their analysis, depending on how the AI tools were being used: standard of care, decision support, second reader, assisted targeted review, triage, high-confidence filtering, and standalone. They noted that for decision support, as a second reader, or for assisted targeted review, AI’s role is to augment the capabilities of the radiologist, flagging areas to be given further attention by the radiologist.

“The approach preserves clinical oversight while leveraging AI for complex pattern recognition,” the authors wrote about decision-support workflow. Likewise, they described AI used as a second reader as a “safety net,” noting that for these types of workflows, AI “reduces false negatives and improves sensitivity without replacing the clinical judgement of [multidisciplinary teams] regarding the need for biopsy.”

With triage, high-confidence filtering, and standalone workflows, the researchers recommend caution, suggesting that the more autonomous the AI is, the more review is needed.

For AI systems triaging patients, they warn of automation bias, referring to the risk of missing cancer or delaying diagnosis in cases assessed as “low suspicion.” For high-confidence filtering, they mention “ethical considerations” in the potential for AI to make final decisions about some cases, which could increase the risk of missed or delayed diagnoses (or conversely, unnecessary biopsies). For both, they recommend that the systems be carefully calibrated at highly sensitive and specific operating points, and equally carefully supervised.
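To illustrate what an "operating point" means in practice, here is a minimal Python sketch. It is not taken from the report: the function name, the scores, and the labels are all hypothetical, chosen only to show how moving the threshold on an AI suspicion score trades sensitivity against specificity.

```python
from typing import List, Tuple


def sensitivity_specificity(
    scores: List[float], labels: List[int], threshold: float
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) when cases scoring >= threshold are flagged.

    labels: 1 = cancer present, 0 = cancer absent (hypothetical ground truth).
    """
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y == 1 and s >= threshold)  # cancers flagged
    fn = sum(1 for s, y in pairs if y == 1 and s < threshold)   # cancers missed
    tn = sum(1 for s, y in pairs if y == 0 and s < threshold)   # benign cleared
    fp = sum(1 for s, y in pairs if y == 0 and s >= threshold)  # benign flagged
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical AI suspicion scores for eight cases (illustrative only, not real data).
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.70, 0.90]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

for threshold in (0.3, 0.5):
    sens, spec = sensitivity_specificity(scores, labels, threshold)
    print(f"threshold={threshold}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In this toy data, the lower threshold catches every cancer but flags one benign case, while the higher threshold clears every benign case but misses one cancer. That is the trade-off a triage or filtering workflow has to settle when it fixes its operating point.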

Strong words

For standalone AI tools, in which AI systems review images independently or make biopsy or discharge decisions without review by radiologists, Padhani and Papanikolaou reserved their strongest conclusions. They noted that these systems are not recommended and called them a “moral hazard with significant concerns regarding accountability,” although they conceded that such systems are economical and may be useful in settings where access to radiologists is severely limited or in advanced presentations.

Moreover, the authors noted that greater automation in AI systems demands higher performance: “given reduced clinical oversight, autonomous workflows require near-perfect accuracy,” they wrote. In addition, it is critical that developers and regulators have a comprehensive understanding of those performance needs to ensure the clinical safety and efficacy of AI-assisted devices.

In their final recommendations, the authors called for prospective studies to focus on the safety and efficacy of these AI-based workflow tools within real-world clinical settings, and to assess them across diverse clinical settings and patient cohorts.

In addition, it is critical for developers to assess these tools with a focus on the radiologists who will use them: “By carefully considering the performance requirements and understanding the dynamics of human–AI interaction, radiologists can harness the power of AI-enabled devices to improve the accuracy and efficiency of prostate cancer diagnosis,” they concluded.

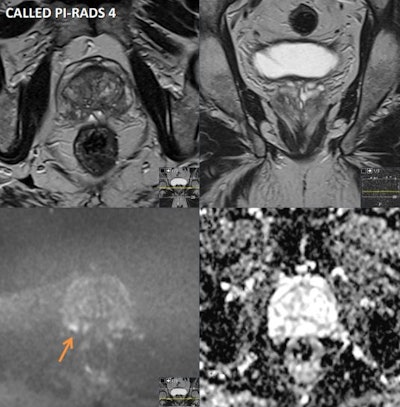

A 70-year-old asymptomatic man had a prostate-specific antigen (PSA) level of 2.67 in 2013, 3.13 in 2018, and 6.38 in August 2021. He had a Gleason Score of 3+4 pT3a, R1 left apex margin. Multiparametric MRI was performed on a 1.5 tesla scanner. Prostate volume was 33 cc and PSA density was 0.19. Peripheral zone (PZ): patchy intermediate signal bilaterally with a 6 mm focus of restricted diffusion and early enhancement in the right posterior base (see annotated images), PI-RADS 4 / LIKERT 4. Transition zone (TZ): no suspicious focal lesions. Extraprostatic extension: not evident. Seminal vesicles: normal. Lymph nodes: none enlarged. Bones: no suspicious focal lesions. Incidental finding: 12 mm polypoid lesion in the mid to lower rectum (annotated image) will require colorectal review and endoscopic correlation. Conclusion: small PI-RADS 4 lesion in the right posterior PZ will require a targeted biopsy. If malignancy is proven histologically, suggested radiological staging would be T2N0Mx. Figure courtesy of Prof. Anwar Padhani, from the "AI - Human Workflows NoteBookLM" podcast.

Read the study here. Also, Prof. Padhani released a new podcast, called "AI - Human Workflows NoteBookLM," on 11 March.